In the vast landscape of the internet, where search engines crawl and index websites, the robots.txt file plays a pivotal role in guiding these digital explorers. A well-structured robots.txt file can significantly affect how your website’s content is accessed and displayed in search engine results. In this guide, we will walk through setting up the right robots.txt file to ensure the best visibility, control, and indexing of your web pages.

Understanding the Robots.txt File

The robots.txt file, a simple text document residing in the root directory of a website, serves as a roadmap for web crawlers, instructing them which parts of the site should be crawled and which should be avoided. This file acts as a gatekeeper, directing search engine bots and other automated agents away from sensitive or irrelevant content.

Basic Syntax and Rules

Creating a robots.txt file demands adherence to a specific syntax. Each set of directives is structured as follows:

User-agent: [User-agent name]

Disallow: [URL path]

The User-agent line indicates the name of the web crawler or user agent that the directives apply to. The Disallow line designates the URLs that should not be crawled. To grant access to a specific location, you can use the Allow directive.
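To see how these three directives interact, here is a minimal sketch using Python's standard-library `urllib.robotparser`. The rules, paths, and the crawler name `ExampleBot` are hypothetical and only serve as illustration. This parser applies the first rule that matches, so the `Allow` line is placed before the broader `Disallow` line.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; the paths are not from a real site.
# The lines of one group are kept contiguous, because this parser treats
# a blank line as the end of a group.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit
Disallow: /private
"""


def is_allowed(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if the rules let `user_agent` crawl `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    for path in ("/blog", "/private/notes", "/private/press-kit/logo.png"):
        print(path, is_allowed(ROBOTS_TXT, "ExampleBot", path))
```

Real crawlers may resolve conflicts between Allow and Disallow differently from this parser, so treat the result as a quick local check rather than a guarantee.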

Fine-Tuning Access

Webmasters often require varying degrees of access control for different sections of their website. For instance, you may want to prevent search engines from crawling private or sensitive information, login pages, or duplicate content. Using wildcard characters like asterisks (*) can help in setting broader rules that exclude entire sections or patterns of URLs.
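The sketch below illustrates how a wildcard rule can be read. It assumes the pattern conventions documented by major search engines, where `*` matches any sequence of characters and a trailing `$` marks the end of the URL. The function name `rule_matches` is hypothetical, and not every crawler supports these wildcards.

```python
import re


def rule_matches(pattern: str, path: str) -> bool:
    """Check whether a robots.txt path pattern matches a URL path.

    Assumes '*' matches any run of characters and a trailing '$'
    anchors the pattern to the end of the path. Without '$', the
    pattern only has to match the beginning of the path.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal piece and join the pieces with '.*'.
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += r"\Z"
    return re.match(regex, path) is not None


if __name__ == "__main__":
    print(rule_matches("/private/*/drafts", "/private/2024/drafts/post"))
    print(rule_matches("/*.pdf$", "/emoji-list.pdf"))
```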

Goal:

To properly create or optimize your robots.txt file.

Ideal Outcome: You have an excellent robots.txt file on your website that allows search engines to index your website exactly as you want them to.

Pre-requisites or requirements:

- You need to have access to the Google Search Console property of the website you are working on. If you don’t have a Google Search Console property set up yet, you can do so by following SOP 020.

Why this is important: Your robots.txt file sets the fundamental rules that most search engines will read and follow once they start crawling your website. It tells search engines which parts of your website you don’t want (or they don’t need) to crawl.

Where this is done: In a text editor, and in Google Search Console. If you are using WordPress, in your WordPress Admin Panel as well.

When this is done: Typically you would audit your robots.txt at least every 6 months to make sure it is still current. You should have a proper robots.txt whenever you start a new website.

Who does this: The person responsible for SEO in your organization.

Auditing your current Robots.txt file

1. Open your current robots.txt file in your browser.

a. Note: You can find your robots.txt by going to ‘http://yourdomain.com/robots.txt’. Replace “http://yourdomain.com” with your actual domain name.

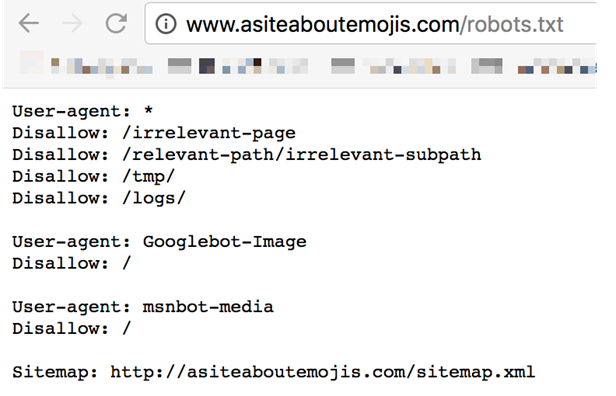

i. Example: http://asiteaboutemojis.com/robots.txt

b. Note 2: If you can’t find your robots.txt, it may be that none exists yet. In that case, jump to the “Creating a Robots.txt File” chapter of this SOP.

2. Go through the following checklist and fix each issue if it exists:

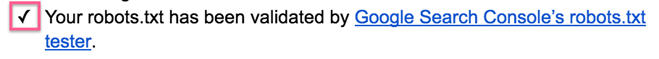

🗹 Your robots.txt has been validated by Google Search Console’s robots.txt file tester.

i. Note: If you are not sure how to use the tool, you can follow the last chapter of this SOP, and then come back to the current chapter.

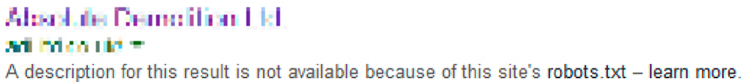

🗹 Do not try to remove pages from Google Search Results by using the robots.txt only.

i. Example: If you don’t want “http://asiteaboutemojis.com/passwords” to appear in the search results, blocking “/passwords” will not result in that page being removed from Google. The page will likely remain indexed and this message will be displayed instead:

🗹 Your robots.txt is disallowing unimportant system pages from being crawled

i. Example: default pages, server logs, etc

🗹 You are disallowing sensitive data from being crawled.

i. Example: Internal documents, Customer’s Data, etc.

ii. Remember: Blocking access in the robots.txt file is not enough to prevent those pages from being indexed. In the case of sensitive data, not only should those pages/files be removed from search engines, they should also be password protected. Attackers frequently read the robots.txt file to find confidential information, and if there is no other protection in place, nothing stops them from stealing your data.

🗹 You are not disallowing important scripts that are necessary to render your pages correctly.

i. Example: Do not block JavaScript files that are necessary to render your content correctly.

- To make sure you didn’t miss any page after this checklist, perform the following test:

i. Open Google on your browser (the version relevant for the country that you are targeting, for instance google.com).

ii. Search for “site:yourdomain.com” (replace yourdomain.com with your real domain name).

Example:

iii. You will be able to see the search results for that domain that are indexed by Google. Glance through as many of them as you can to find results that do not meet the guidelines of the checklist.
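Parts of the checklist above can be approximated with a small script. The following is a rough sketch, assuming Python's standard-library `urllib.robotparser`. The function name `audit_robots`, the crawler name `ExampleBot`, and all paths are hypothetical. It only checks plain path prefixes, and it does not replace the manual review or the Google Search Console tester.

```python
from urllib.robotparser import RobotFileParser


def audit_robots(robots_txt, must_block, must_allow, user_agent="ExampleBot"):
    """Return a list of human-readable problems found in `robots_txt`.

    `must_block` lists paths that should be disallowed (system pages,
    sensitive data); `must_allow` lists paths that must stay crawlable
    (for example scripts needed to render pages).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    problems = []
    for path in must_block:
        if parser.can_fetch(user_agent, path):
            problems.append(f"{path} should be disallowed but is crawlable")
    for path in must_allow:
        if not parser.can_fetch(user_agent, path):
            problems.append(f"{path} is needed for rendering but is blocked")
    return problems


# Hypothetical file that blocks too much and too little at the same time.
SAMPLE = """\
User-agent: *
Disallow: /server-logs
Disallow: /assets
"""

if __name__ == "__main__":
    for problem in audit_robots(
        SAMPLE,
        must_block=["/server-logs/2024.log", "/internal-docs/plan.pdf"],
        must_allow=["/assets/app.js"],
    ):
        print(problem)
```

A path passing this check is only hidden from well-behaved crawlers, so sensitive files still need password protection as described above.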

Creating a Robots.txt File

- Create a .txt document on your computer.

- Here are two templates you can copy straight away if they fit what you are looking for:

a. Disallowing all crawlers from crawling your entire website:

Important: This will block Google from crawling your entire website. This can severely damage your search engine rankings. Only in very rare circumstances do you want to do this, for instance if it is a staging website, or if the website is not meant for the public.

Note: Don’t forget to replace “yoursitemapname.xml” with your actual sitemap URL.

Note 2: If you don’t have a Sitemap yet you can follow SOP 055

User-agent: *

Disallow: /

Sitemap: http://yourdomain.com/yoursitemapname.xml

b. Allowing all crawlers to crawl your entire website:

Note: It’s OK to have this robots.txt file, even though it won’t change the way search engines crawl your website, since by default they assume they can crawl everything that is not blocked by a “Disallow” rule.

Note 2: Don’t forget to replace “yoursitemapname.xml” with your actual sitemap URL.

User-agent: *

Allow: /

Sitemap: http://yourdomain.com/yoursitemapname.xml

- Typically those two do not fit your case. You will usually want to allow all robots, but you only want them to access specific paths of your website. If that is the case:

To block a specific path (and respective sub-paths):

i. Start by adding the following line to it:

User-agent: *

ii. Block paths, subpaths, or filetypes that you don’t want crawlers to access by having an extra line:

a. For paths: Disallow: /your-path

■ Note: Replace “your-path” with the path that you want to block. Remember that all sub-paths below that path will be blocked as well.

■ Example: “Disallow: /emojis” will disallow crawlers from accessing “asiteaboutemojis.com/emojis” but will also disallow them from accessing “asiteaboutemojis.com/emojis/red”

b. For filetypes: Disallow: /*.filetype$

■ Note: Replace “filetype” with the filetype that you want to block. The asterisk (*) matches any file name and the dollar sign ($) marks the end of the URL.

■ Example: “Disallow: /*.pdf$” will disallow crawlers from accessing “asiteaboutemojis.com/emoji-list.pdf”
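If you maintain many rules, the file can be assembled programmatically. The sketch below is one possible way to do that. The function name `build_robots_txt` and its parameters are hypothetical. For filetypes it writes the pattern as `/*.filetype$`, on the assumption that the target crawlers support the `*` and `$` wildcards.

```python
def build_robots_txt(blocked_paths, blocked_filetypes, sitemap_url=None):
    """Build robots.txt content that blocks the given paths and filetypes
    for all crawlers and optionally announces a sitemap."""
    lines = ["User-agent: *"]
    for path in blocked_paths:
        # Every rule path starts with exactly one leading slash.
        lines.append(f"Disallow: /{path.lstrip('/')}")
    for filetype in blocked_filetypes:
        # '*' matches any file name, '$' anchors the rule to the URL end.
        lines.append(f"Disallow: /*.{filetype.lstrip('.')}$")
    if sitemap_url:
        lines.append("")
        lines.append(f"Sitemap: {sitemap_url}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_robots_txt(
        ["emojis"], ["pdf"], "http://yourdomain.com/yoursitemapname.xml"
    ))
```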

To block specific crawlers from crawling your website

i. Add a new line at the end of your current Robots.txt File:

Note: Don’t forget to replace “Emoji Crawler” with the actual crawler you want to block. You can find a list of active crawlers here and see if you want to block any of them specifically. If you don’t know what this is you can skip this step.

a. User-agent: Emoji Crawler

b. Disallow: /

Example: To block Google Images’ crawler:

a. User-agent: Googlebot-Image

b. Disallow: /
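To check that a crawler-specific group behaves as intended, you can test the same URL against several user agents. This is a small sketch using Python's standard-library `urllib.robotparser`. The file content, the helper name `blocked_agents`, and the crawler name `ExampleBot` are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: every crawler is kept out of /emojis, and
# Googlebot-Image is kept out of the whole site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /emojis

User-agent: Googlebot-Image
Disallow: /
"""


def blocked_agents(robots_txt, agents, path):
    """Return the subset of `agents` that may not crawl `path`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, path)]


if __name__ == "__main__":
    print(blocked_agents(ROBOTS_TXT, ["Googlebot-Image", "ExampleBot"], "/about"))
```

A crawler that matches its own group follows only that group, which is why `Googlebot-Image` is blocked everywhere while other crawlers are only kept out of `/emojis`.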

Adding the Robots.txt File to your website

This SOP covers two solutions for adding a robots.txt to your website:

● If you are using WordPress on your website and running the Yoast SEO Plugin click here.

○ Note: If you don’t have Yoast installed on your WordPress website you can do it by following that specific chapter on SOP 008 by clicking here.

○ Note 2: If you don’t want to install Yoast on your website, the procedure below (using FTP or SFTP) will also work for you.

● If you are using another platform, you can upload your robots.txt through FTP or SFTP. This procedure is not covered by this SOP. If you don’t know how, or don’t have access to upload files to your server, you can ask your web developer or the company that developed your website to do so. Here is a template you can use to send over email:

“Hi,

I’ve created a robots.txt file to improve the SEO of the website. You can find the file attached to this email. I need it to be placed in the root of the domain and it should be named “robots.txt”.

You can find here the technical specifications for this file in case you need any more information: https://developers.google.com/search/reference/robots_txt

Thank you.”

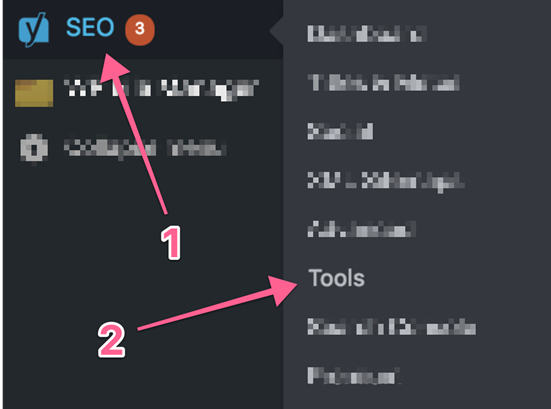

Adding your robots.txt file through the Yoast SEO plugin:

- Open your WordPress Admin.

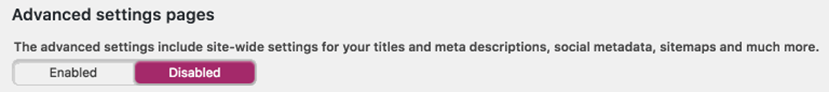

- Click “SEO” → “Tools”.

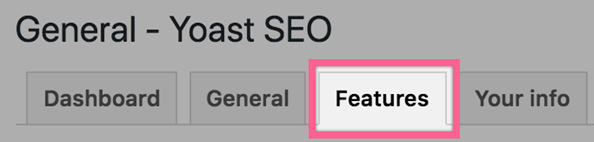

Note: If you don’t see this option, you will need to enable “Advanced features” first:

- Click “SEO” → “Dashboard” → “Features”

- Click “Save changes”

- Click “File Editor”:

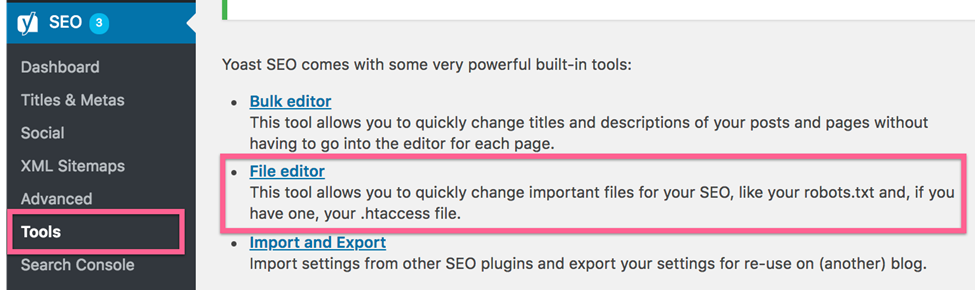

- You will see an input text box where you can add or edit the text of your robots.txt file → Paste the content of the robots.txt file you have just created → Click “Save Changes to Robots.txt File”.

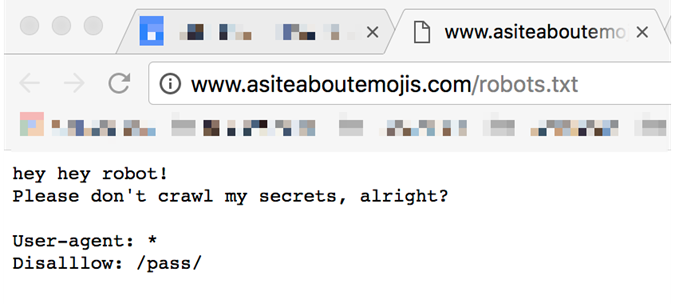

- To make sure everything went well, open “http://yoursite.com/robots.txt” in your browser.

a. Note: Don’t forget to replace “http://yoursite.com” with your domain name.

i. Example: http://asiteaboutemojis.com/robots.txt

- You should be able to see your robots.txt live on your website:

a. Example: http://www.asiteaboutemojis.com/robots.txt
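The final check above can also be done with a short script that compares the file you created with the one served by your website. This is a sketch under assumptions: the function names are hypothetical, the live file is assumed to be UTF-8 text, and only cosmetic differences (line endings, trailing whitespace, trailing blank lines) are ignored.

```python
from urllib.request import urlopen


def normalize(text):
    """Return the lines of `text` without line-ending differences,
    trailing whitespace, or trailing blank lines."""
    lines = [line.rstrip() for line in text.replace("\r\n", "\n").split("\n")]
    while lines and not lines[-1]:
        lines.pop()
    return lines


def fetch_live_robots(site_url):
    """Download robots.txt from the root of `site_url` (needs network access)."""
    with urlopen(site_url.rstrip("/") + "/robots.txt", timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def matches_live(local_text, live_text):
    """Return True if both versions contain the same rules line by line."""
    return normalize(local_text) == normalize(live_text)
```

For example, `matches_live(open("robots.txt").read(), fetch_live_robots("http://yoursite.com"))` would compare a local file named `robots.txt` with the live one. Replace “http://yoursite.com” with your domain name.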

Validating your Robots.txt File

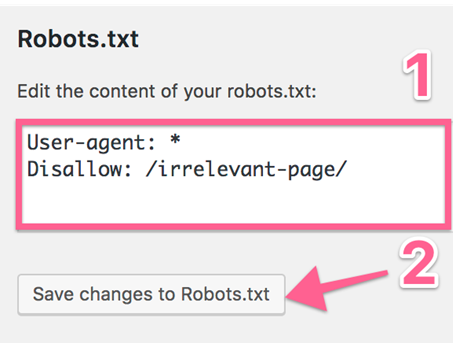

- Open Google Search Console’s robots.txt file tester.

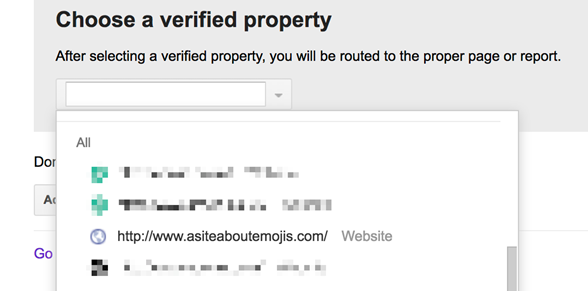

- If you have multiple properties in that Google Account select the right one:

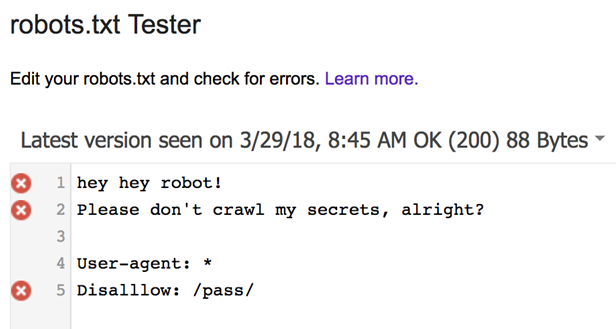

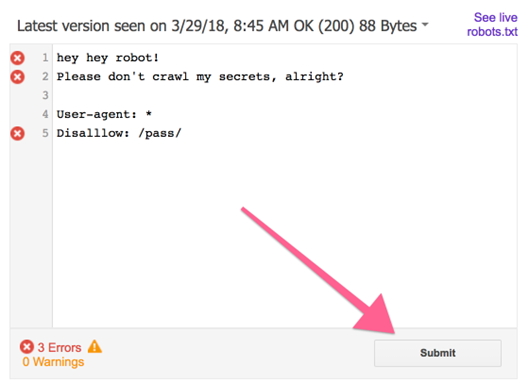

- You will be sent to the Robots.txt File Tester tool where you will see the last robots.txt file that Google crawled.

- Open your actual robots.txt and make sure the version that you are seeing in Google Search Console’s tool is exactly the same as the current version you have live on your domain.

a. Note: You can find your robots.txt file by going to ‘http://yourdomain.com/robots.txt’. Replace “http://yourdomain.com” with your actual domain name.

i. Example: http://asiteaboutemojis.com/robots.txt

b. Note: If that is not the case, and you’re seeing an outdated version on GSC (Google Search Console):

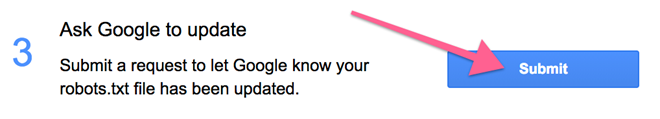

i. Click “Submit” in the bottom right corner:

ii. Click “Submit” again:

iii. Refresh the page by pressing Ctrl+F5 (PC) or Cmd ⌘+R (Mac)

- If there are no syntax errors or logic warnings on your file you will see this message:

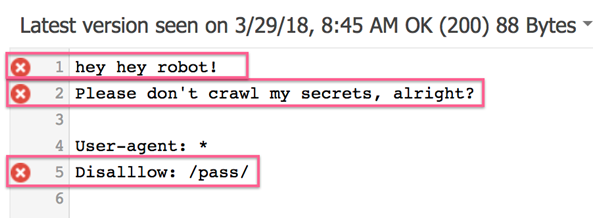

Note: If there are errors or warnings they will be displayed on the same spot:

Note 2: You will also see symbols next to each of the lines that are generating those errors:

If that is the case, make sure that you followed the guidelines laid out in this SOP and that your file follows Google’s guidelines here.

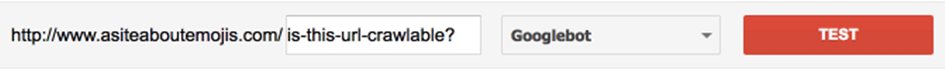

- If no issues were found in your robots.txt file, test your URLs by adding them to the text input box and clicking “Test”:

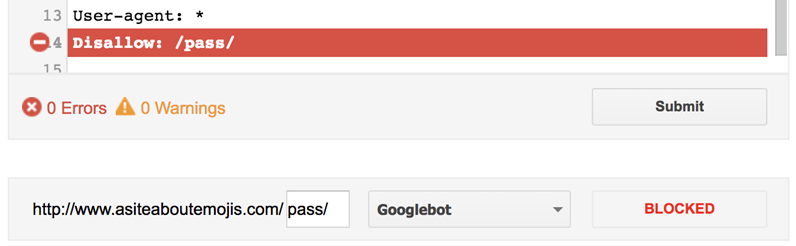

Note: If your URL is being blocked by your robots.txt you will see a “Blocked” message instead, and the tool will highlight the line that is blocking that URL:
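To get a similar hint offline, the sketch below reports which line of a robots.txt file blocks a given path. It is a simplification and not how the Google tool works internally: it only looks at `Disallow` rules in groups that apply to all crawlers (`User-agent: *`), matches plain path prefixes, and ignores `Allow` rules and wildcards. The function name `find_blocking_line` is hypothetical.

```python
def find_blocking_line(robots_txt, path):
    """Return (line_number, line) for the first Disallow rule under
    'User-agent: *' whose path is a prefix of `path`, or None."""
    in_wildcard_group = False
    previous_was_agent = False
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            # Consecutive User-agent lines belong to the same group.
            if not previous_was_agent:
                in_wildcard_group = False
            in_wildcard_group = in_wildcard_group or value == "*"
            previous_was_agent = True
            continue
        previous_was_agent = False
        if field == "disallow" and in_wildcard_group and value:
            if path.startswith(value):
                return number, raw.strip()
    return None


# Hypothetical file used for the demonstration.
SAMPLE = "User-agent: *\nDisallow: /emojis\nDisallow: /passwords\n"

if __name__ == "__main__":
    print(find_blocking_line(SAMPLE, "/passwords/list"))
```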

Sitemaps and Robots.txt Collaboration

While the robots.txt file is primarily concerned with access control, sitemaps facilitate efficient crawling and indexing. Sitemaps provide search engines with a complete blueprint of your website’s structure, helping them locate and index content more efficiently. It is important to note that robots.txt and sitemaps are complementary tools, each serving a distinct purpose.
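Because the sitemap location is announced inside robots.txt through the `Sitemap:` line, you can check that the link between the two files is in place. This is a minimal sketch, assuming Python 3.8 or later, where the standard-library `RobotFileParser` provides `site_maps()`. The helper name `list_sitemaps` is hypothetical.

```python
from urllib.robotparser import RobotFileParser


def list_sitemaps(robots_txt):
    """Return the sitemap URLs declared in `robots_txt` (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


if __name__ == "__main__":
    content = (
        "User-agent: *\n"
        "Allow: /\n"
        "\n"
        "Sitemap: http://yourdomain.com/yoursitemapname.xml\n"
    )
    print(list_sitemaps(content))
```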

Best Practices

Thorough Testing: Implementing a robots.txt file should be accompanied by rigorous testing to ensure it functions as intended. Webmaster tools provided by search engines can help in identifying any discrepancies.

Regular Updates: As your website evolves, so should your robots.txt file. Regularly update it to accommodate new content, sections, or changes to your website’s structure.

Respect Standards: Adhere to the guidelines set by the Robots Exclusion Standard. Misconfigured robots.txt files can inadvertently block important pages from search engine crawlers.

User-agent Specific Directives: Tailor directives to specific user agents, if needed. This level of granularity ensures that different types of bots are handled correctly.

Conclusion

In the intricate dance between websites and search engines, the robots.txt file stands as an essential tool for site owners to control their website’s indexing and accessibility. By understanding the nuances of this unassuming text document and using it judiciously, you can build a harmonious relationship between your website and search engines, ensuring your content gets the visibility it deserves.

Discover the art of crafting an ideal robots.txt file and enhance your SEO game. Connect with Hexdigitalplanet, a Digital Marketing Agency in India, for top-notch SEO services and expert guidance. Your journey towards online success starts here.